Liz Magic Laser using Visage Technologies

Introduction

Artist Liz Magic Laser offers guided exercises and findings from her experimental research study. She brings together leading experts from divergent fields: EMDR therapist Dr. Ameet Aggarwal, who works in functional medicine, and market research expert Dan Hill, Ph.D., who specialises in face tracking. Hill’s most recent book, First Blush, 2019, uses eye movement tracking to understand how we engage with famous works of art in museums, from the Sphinx to Tracey Emin’s My Bed.

In this research Laser explores how eye movement can be manipulated to affect our emotional states, questioning the psychological impact of eye tracking technology such as Apple’s new VR headset (Apple Vision Pro). It seems the choreographing of eye movement will be the next frontier in the user interfaces that will reshape our perceptual experience and possibly rewire our brains in the era to come. Advertisers use eye movement tracking to grab our attention, thrusting our brains into high alert mode and elevating stress. EMDR therapy uses eye choreography to combat the effects of trauma and reduce our stress levels. Laser has devised the following guided exercises to offer an insight into how face tracking market research tools work and how EMDR therapy feels.

I want people to have an embodied experience of eye movement manipulation that’s been developed for polar opposite aims: for therapeutic versus profit driven purposes. Can EMDR tools help us navigate the triggers we encounter in cyberspace and art spaces?

Liz Magic Laser

1. View the tracker hacked

Liz Magic Laser and her research participants play with a face tracking algorithm to influence how their emotional states are perceived.

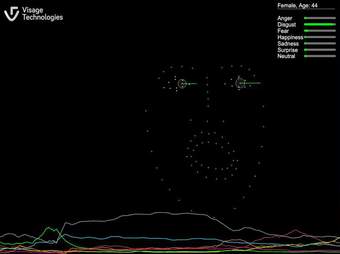

Laser conducted an experimental research study with participants who consented to Visage facial expression analysis while receiving EMDR therapy and informational briefings on eye tracking technology. The following animation shows Laser guiding participants to explore the facial expressions associated with the seven emotional states.

2. Test drive

Your turn for expression exploration.

The facial expression and eye movement tracking algorithm used in Laser’s research was developed for market research by a company called Visage Technologies. It uses motion tracking to estimate gender, age and emotional state. Please take a few minutes to familiarise yourself with its options: play with your expressions or toggle the different option boxes on and off. How does the tracker say you are feeling? Do you feel it is accurate? Does it resonate? Can you alter how the software analyses you?

Consider each emotional state defined by the software. Try to achieve the facial expression the tracker associates with each emotion.

Start with anger. Where in your face does the tracker locate anger? In your forehead? Try moving your eyes. Does squinting affect your anger level? What happens if you cast a sideways glance or look down toward the bottom of the screen? Now work your way through the list of emotions...

Liz Magic Laser using Visage Technologies. Courtesy of the artist and Visage Technologies AB.

3. Try EMDR

Experience Eye Movement Desensitisation and Reprocessing therapy with Dr. Ameet Aggarwal.

EMDR (Eye Movement Desensitisation and Reprocessing) therapy uses simple eye movement choreography to help people process trauma. The logic is that bilateral stimulation of the two sides of the body helps you integrate the two hemispheres of the brain.

After trying EMDR with the guided video below, take some time to reflect. Did you feel that eye movement can rewire your brain?

If eye movement can reduce our stress levels, is the opposite also possible? Can a fixed gaze at one point on a screen or erratic eye movement patterns instigated by pop ups have harmful effects on our mental state?

Our eye movement patterns are already being choreographed by advertisers. If emerging technology like Apple’s VR headset (Apple Vision Pro) uses eye movement tracking for navigation, is it not already dictating eye movement patterns as well? User interfaces that rely on touch will likely be replaced by AI algorithms that predict our eye movement to determine where we move in virtual space and even for simple internet surfing. No longer will we rely on haptics: touch screens, mouse or joystick clicking. Are we moving further and further towards disembodiment?

4. Learn from an expert

Dan Hill gives the inside scoop on how eye and face tracking is being deployed by corporations.

In his 2007 book Emotionomics, Dan Hill explains that the traditional focus group interview has a fundamental flaw: a person’s testimony is unreliable because they usually don’t know how they actually feel. He advocates augmenting market research surveys with facial expression analysis because your involuntary eye movements offer more insight into your true emotions than your words.

As Hill explains it, “facial coding helps us explore the SAY / FEEL gap: the discrepancy between what people say vs. what they actually feel.”

5. See the results

Our findings on the accuracy of facial expression and eye movement tracking tools.

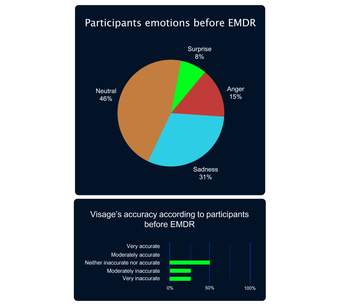

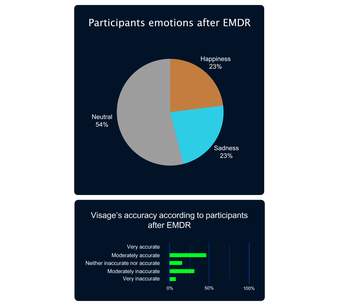

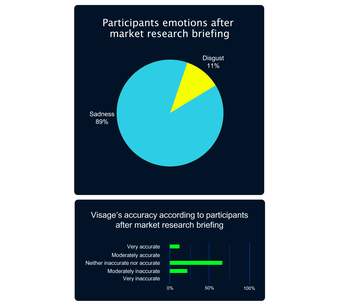

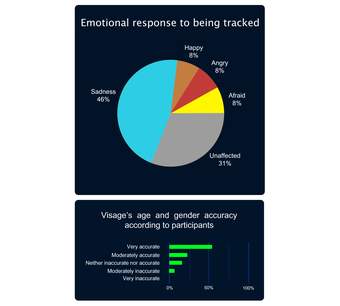

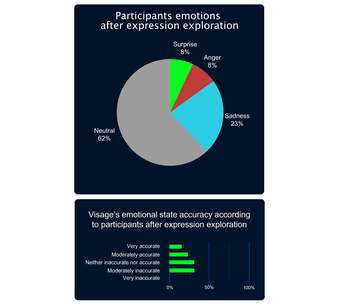

Participants in Laser’s study were intermittently polled about the accuracy of Visage’s emotional tracking software. They reported approximately 50% accuracy, but who is the more reliable judge of emotions, the person or the algorithm?

Backstory

Visage’s face tracking technology is derived from older 17th and 19th century classification systems for identifying emotions: the passions defined by René Descartes and Charles Darwin’s The Expression of the Emotions in Man and Animals, 1872. More recent 20th century studies were conducted by Dr. Paul Ekman at the University of California, where he developed a system called FACS: Facial Action Coding System. Visage’s emotional expression classifications are largely based on Ekman’s work.